Data Analytics

Traditionally, big data analytics required extensive hand-written code and manual analysis. Modern tools and analysis techniques use AI throughout the analytics process: they automate routine work, enable advanced techniques, improve accuracy and efficiency, and generate insights and recommended actions.

1) Define your business objectives for analytics, such as identifying promising new markets or weak production processes.

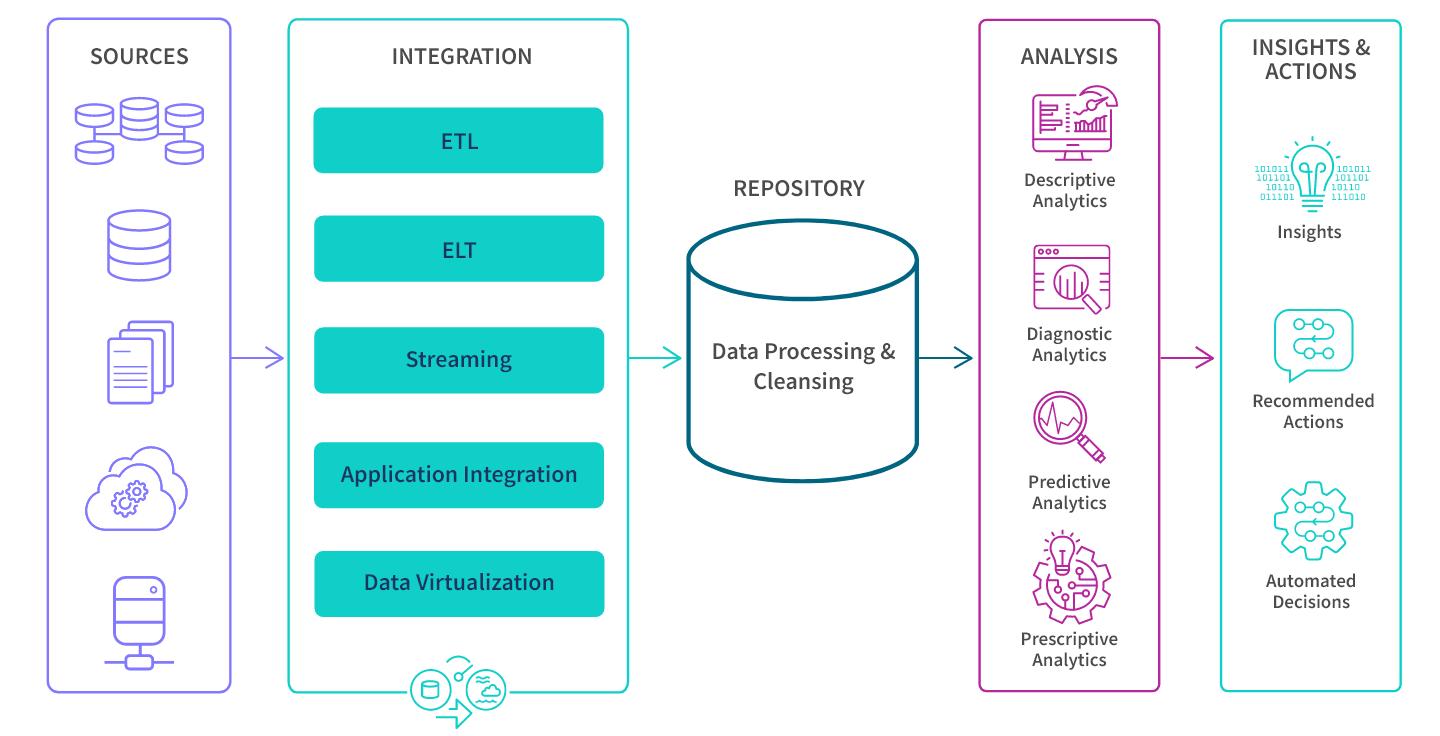

2) Identify the data sources you’ll need: systems such as transactional, supply chain, social media, and CRM applications. These sources can provide historical data, real-time streaming data, or both.

3) Integrate your data into a repository such as a data warehouse or data lake, typically in the cloud. This integration process of extracting, transforming, and aggregating raw data (much of it unstructured) gives you a comprehensive, unified view of your business and makes data retrieval and analysis more efficient. AI algorithms, whether applied by data engineers or built into modern tools, improve data quality and speed up data collection and preparation by fixing errors such as duplicates, redundant records, and formatting issues. There are five common integration approaches:

An ETL pipeline is a traditional type of data pipeline that converts raw data to match the target system in three steps: extract, transform, and load. Data is transformed in a staging area before it is loaded into the target repository (typically a data warehouse). Because the data arrives already cleaned and structured, analysis in the target system is fast and accurate. ETL is most appropriate for smaller datasets that require complex transformations.
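
The three ETL steps can be sketched in Python as follows. This is a minimal illustration, not a production pipeline: the `RAW_ORDERS` records and the `extract`, `transform`, `load`, and `run_etl` functions are hypothetical, and an in-memory SQLite database stands in for the data warehouse. The transform step also shows the kind of cleanup described in step 3 (dropping duplicates and normalizing formatting) happening before anything reaches the target.

```python
import sqlite3

# Hypothetical raw records extracted from a source system (e.g. a CRM export).
RAW_ORDERS = [
    {"order_id": "1001", "customer": " Alice ", "amount": "120.50"},
    {"order_id": "1002", "customer": "bob", "amount": "75"},
    {"order_id": "1001", "customer": "Alice", "amount": "120.50"},  # duplicate
]


def extract():
    """Extract: pull raw rows from the source (here, an in-memory list)."""
    return list(RAW_ORDERS)


def transform(rows):
    """Transform in a staging area: fix formatting and drop duplicate orders."""
    staged = {}
    for row in rows:
        order_id = int(row["order_id"])
        if order_id in staged:
            continue  # keep the first occurrence of each order ID
        staged[order_id] = (
            order_id,
            row["customer"].strip().title(),  # trim whitespace, normalize case
            float(row["amount"]),  # convert text amounts to numbers
        )
    return list(staged.values())


def load(conn, rows):
    """Load: write only the already-cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    load(conn, transform(extract()))


warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
run_etl(warehouse)
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1001, 'Alice', 120.5), (1002, 'Bob', 75.0)]
```

Keeping the transform outside the target means the warehouse only ever sees clean rows, which is the trade-off that makes ETL a good fit for smaller datasets with complex cleanup rules.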

The more modern ELT pipeline loads data immediately and then transforms it within the target system, typically a cloud-based data lake, data warehouse, or data lakehouse. This approach is more appropriate when datasets are large and timeliness is important, since loading is often quicker. ELT pipelines typically run in micro-batches or use change data capture (CDC), which propagates only the rows that changed in the source.
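
For contrast with ETL, here is a minimal ELT sketch, again assuming an in-memory SQLite database as a stand-in for a cloud warehouse or lakehouse. The `RAW_EVENTS` data and the `load_raw` and `transform_in_target` functions are hypothetical. Raw rows are loaded untouched, and the cleanup (normalizing region codes, casting text amounts) and aggregation run afterwards as SQL inside the target. Micro-batching and CDC are not shown here.

```python
import sqlite3

# Hypothetical raw events, loaded exactly as received (no cleanup first).
RAW_EVENTS = [
    ("2024-01-05", "EU", "120.50"),
    ("2024-01-05", "eu", "80"),
    ("2024-01-06", "US", "200"),
]


def load_raw(conn, rows):
    """Load: copy source rows straight into a raw table, untransformed."""
    conn.execute("CREATE TABLE raw_events (day TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", rows)


def transform_in_target(conn):
    """Transform: use the target's own SQL engine to clean and aggregate."""
    conn.execute(
        """
        CREATE TABLE daily_sales AS
        SELECT day,
               UPPER(region) AS region,
               SUM(CAST(amount AS REAL)) AS total
        FROM raw_events
        GROUP BY day, UPPER(region)
        """
    )


conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
load_raw(conn, RAW_EVENTS)
transform_in_target(conn)
print(conn.execute("SELECT * FROM daily_sales ORDER BY day, region").fetchall())
# [('2024-01-05', 'EU', 200.5), ('2024-01-06', 'US', 200.0)]
```

Because the raw table is kept, new transformations can be re-run later against the same loaded data without re-extracting it from the source.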

Data streaming moves data continuously, in real time, from source to target. Modern data integration platforms can deliver analytics-ready data into streaming and cloud platforms, data warehouses, and data lakes.
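
The core idea of streaming, processing each record as it arrives instead of waiting for a complete batch, can be sketched with Python generators. This is an illustration only: `event_source` is a hypothetical stand-in for a real message queue or streaming platform, and `running_totals` is a hypothetical consumer that keeps per-region totals up to date after every event.

```python
import time
from typing import Iterable, Iterator


def event_source(events: Iterable[dict], delay_s: float = 0.0) -> Iterator[dict]:
    """Hypothetical source: yields events one at a time, like a message queue."""
    for event in events:
        time.sleep(delay_s)  # simulate events arriving over time
        yield event


def running_totals(stream: Iterator[dict]) -> Iterator[tuple[str, float]]:
    """Consume the stream incrementally, updating totals as each event lands."""
    totals: dict[str, float] = {}
    for event in stream:
        key = event["region"]
        totals[key] = totals.get(key, 0.0) + event["amount"]
        yield key, totals[key]  # downstream sees the updated value immediately


events = [
    {"region": "EU", "amount": 10.0},
    {"region": "US", "amount": 5.0},
    {"region": "EU", "amount": 2.5},
]
for region, total in running_totals(event_source(events)):
    print(region, total)
# EU 10.0
# US 5.0
# EU 12.5
```

Nothing here waits for the input to end, so the same consumer would work on an unbounded stream.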

Application integration lets separate applications work together by moving and syncing data between them, usually through their application programming interfaces (APIs). Because each application typically exposes its own API for sending and receiving data, SaaS application automation tools can help you create and maintain native API integrations efficiently and at scale.
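
A minimal sketch of a one-way sync between two applications whose APIs use different schemas is shown below. Everything here is hypothetical: `FakeCrmApi` and `FakeMarketingApi` are in-memory stand-ins for real SaaS endpoints, and their field names (`Email`, `FirstName`, `Stage`) are invented for illustration. The point is the pattern: read from one API, map fields to the other application's schema, and upsert by a shared key so repeated syncs do not create duplicates.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCrmApi:
    """Hypothetical CRM endpoint: returns contacts in its own schema."""
    contacts: list = field(default_factory=list)

    def list_contacts(self):
        return list(self.contacts)


@dataclass
class FakeMarketingApi:
    """Hypothetical marketing platform: stores subscribers keyed by email."""
    subscribers: dict = field(default_factory=dict)

    def upsert_subscriber(self, email, payload):
        self.subscribers[email] = payload  # insert or overwrite by key


def to_marketing_schema(contact):
    """Map CRM field names onto the marketing platform's field names."""
    return {"first_name": contact["FirstName"], "lifecycle": contact["Stage"].lower()}


def sync(crm, marketing):
    """One-way sync: push every CRM contact into the marketing platform."""
    for contact in crm.list_contacts():
        email = contact["Email"].lower()  # normalize the shared key
        marketing.upsert_subscriber(email, to_marketing_schema(contact))


crm = FakeCrmApi([{"Email": "Ana@Example.com", "FirstName": "Ana", "Stage": "Customer"}])
mkt = FakeMarketingApi()
sync(crm, mkt)
sync(crm, mkt)  # running twice does not create duplicates
print(mkt.subscribers)
# {'ana@example.com': {'first_name': 'Ana', 'lifecycle': 'customer'}}
```

Real integrations would add authentication, pagination, and error handling, which automation tools typically manage for you.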

Data virtualization also delivers data in real time, but only when a user or application requests it. It still creates a unified view of your data, making it available on demand by virtually combining data from different systems without first copying it into a central repository. Virtualization and streaming are well suited to transactional systems built for high-performance queries.
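
The on-demand behavior of data virtualization can be illustrated with a small Python sketch. The `CRM` and `BILLING` dictionaries and the `VirtualCustomerView` class are hypothetical stand-ins; a real virtualization layer would translate requests into live queries against the underlying systems. The key property is that nothing is copied ahead of time: each request reads the current state of every source.

```python
from typing import Callable, Optional

# Hypothetical source systems. In practice these would be live queries
# against, e.g., a CRM database and a billing service.
CRM = {1: {"name": "Ana", "segment": "SMB"}}
BILLING = {1: {"balance": 42.0}}


class VirtualCustomerView:
    """Combines several sources on demand; nothing is copied or stored up front."""

    def __init__(self, *fetchers: Callable[[int], Optional[dict]]):
        self.fetchers = fetchers

    def get(self, customer_id: int) -> dict:
        merged = {"customer_id": customer_id}
        for fetch in self.fetchers:  # each source is queried only at request time
            merged.update(fetch(customer_id) or {})
        return merged


view = VirtualCustomerView(CRM.get, BILLING.get)
print(view.get(1))
# {'customer_id': 1, 'name': 'Ana', 'segment': 'SMB', 'balance': 42.0}
BILLING[1]["balance"] = 10.0  # a change in the source...
print(view.get(1))            # ...is visible on the next request, with no reload
# {'customer_id': 1, 'name': 'Ana', 'segment': 'SMB', 'balance': 10.0}
```

The trade-off is that every request pays the cost of querying the sources, which is why virtualization pairs best with systems that answer queries quickly.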

4) Perform data analysis to find hidden patterns, trends, and valuable insights in large datasets. Your goal is not only to test specific hypotheses but also to discover new questions and unanticipated insights by exploring the data.

5) Gain insights and trigger actions in other systems by integrating your analytics software with other applications. You can embed analytical capabilities directly into software applications, letting users access and analyze data within the context of the application they’re already using. You can also configure your analytics tool to send real-time alerts that help you stay on top of your business and act promptly. For example, when churn risk spikes, the tool could automatically launch an email campaign in your marketing platform that offers personalized retention incentives.
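
The churn example above boils down to a threshold check wired to a callback. The sketch below assumes churn-risk scores between 0 and 1 are already available from a model; `churn_alert`, `start_retention_campaign`, and the 0.7 high-risk cutoff are all hypothetical. In a real deployment the callback would call your marketing platform's API rather than append to a list.

```python
from typing import Callable, Iterable


def churn_alert(
    risk_scores: Iterable[float],
    threshold: float,
    on_spike: Callable[[float], None],
    high_risk_cutoff: float = 0.7,
) -> bool:
    """Fire on_spike when the share of high-risk customers exceeds the threshold."""
    scores = list(risk_scores)
    if not scores:
        return False
    share = sum(s >= high_risk_cutoff for s in scores) / len(scores)
    if share > threshold:
        on_spike(share)  # e.g. start a campaign in the marketing platform
        return True
    return False


triggered = []


def start_retention_campaign(share: float) -> None:
    """Hypothetical hook standing in for a call to a marketing platform."""
    triggered.append(f"retention campaign started (high-risk share {share:.0%})")


churn_alert([0.9, 0.8, 0.2, 0.75], threshold=0.5, on_spike=start_retention_campaign)
print(triggered)
# ['retention campaign started (high-risk share 75%)']
```

Passing the action in as a callback keeps the detection logic independent of whichever system receives the alert.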
